Today’s driver assistance systems help drivers stay aware of dangerous situations and can also act autonomously to prevent accidents. These active safety systems rely on sophisticated sensors, such as radar and cameras, that continuously scan the road ahead and the area around the vehicle.

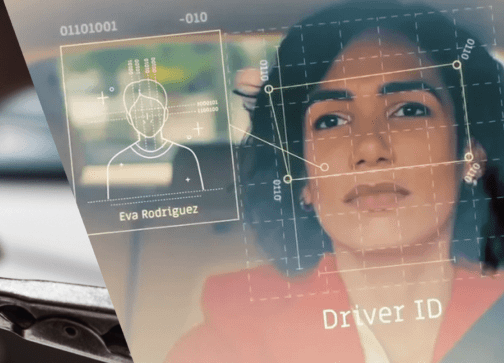

But as the saying goes, it’s not speed that kills but the person behind the wheel. The next step in driver assistance systems is to look inward, at the driver. Polestar is one of the carmakers that will offer driver monitoring technology from Smart Eye as standard in its Polestar 3 SUV.

Designed to help avoid accidents and save lives, the Polestar 3 Driver Monitoring System (DMS) uses two closed-loop premium driver monitoring cameras and software from Smart Eye to track the driver’s head, eye and eyelid movements. Real-time computer analysis of these movements can trigger warning messages, warning sounds and even an emergency stop when the system detects a distracted, drowsy or disconnected driver.
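
The escalation described above, from a message to a sound to an emergency stop, can be pictured as a simple policy based on how long the driver has been judged inattentive. The sketch below is a hypothetical illustration and does not represent Polestar's or Smart Eye's logic. The `Alert` levels, the `EscalationPolicy` thresholds (2, 4 and 8 seconds) and the per-frame `attentive` flag are all assumptions chosen for readability.

```python
from dataclasses import dataclass
from enum import IntEnum
from typing import Iterable, List


class Alert(IntEnum):
    """Hypothetical escalation levels, ordered from mildest to most severe."""
    NONE = 0
    VISUAL = 1          # warning message on the display
    AUDIBLE = 2         # warning sound
    EMERGENCY_STOP = 3  # vehicle brought to a controlled stop


@dataclass(frozen=True)
class EscalationPolicy:
    # Assumed thresholds, in seconds of continuous inattention.
    visual_after: float = 2.0
    audible_after: float = 4.0
    stop_after: float = 8.0

    def level(self, inattentive_seconds: float) -> Alert:
        # Check the most severe threshold first so the highest applicable level wins.
        if inattentive_seconds >= self.stop_after:
            return Alert.EMERGENCY_STOP
        if inattentive_seconds >= self.audible_after:
            return Alert.AUDIBLE
        if inattentive_seconds >= self.visual_after:
            return Alert.VISUAL
        return Alert.NONE


def alerts_for(attentive_flags: Iterable[bool], dt: float,
               policy: EscalationPolicy = EscalationPolicy()) -> List[Alert]:
    """Return one alert level per frame; dt is the frame period in seconds."""
    inattentive = 0.0
    result: List[Alert] = []
    for attentive in attentive_flags:
        # Any attentive frame resets the timer; otherwise it keeps accumulating.
        inattentive = 0.0 if attentive else inattentive + dt
        result.append(policy.level(inattentive))
    return result


# Usage: 20 frames at 2 Hz with the driver looking away the whole time.
levels = alerts_for([False] * 20, dt=0.5)
print([lvl.name for lvl in levels])
```

In this sketch, a single attentive frame resets the timer. That is a deliberate simplification. A more forgiving design might require several consecutive attentive frames before it de-escalates.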

Technology for next-generation vehicles

Smart Eye, founded in 1999, has been supplying Driver Monitoring Systems and Interior Sensing solutions to a number of carmakers for use in their next-generation vehicles. Eye tracking is one of the company’s core technologies. It draws on deep knowledge of the long chain that connects human actions and intentions: from the anatomy of the eye and face, through optics, to intelligent software.

To make sense of the wealth of real-time data its systems produce, Smart Eye makes extensive use of Artificial Intelligence (AI) in its software and algorithms. AI is necessary to achieve a high standard of accuracy, predictability and stability. It is also critical when eye tracking is used in challenging environments, where lighting conditions vary or the subject’s eyes are partly covered.

Tracking eye movements

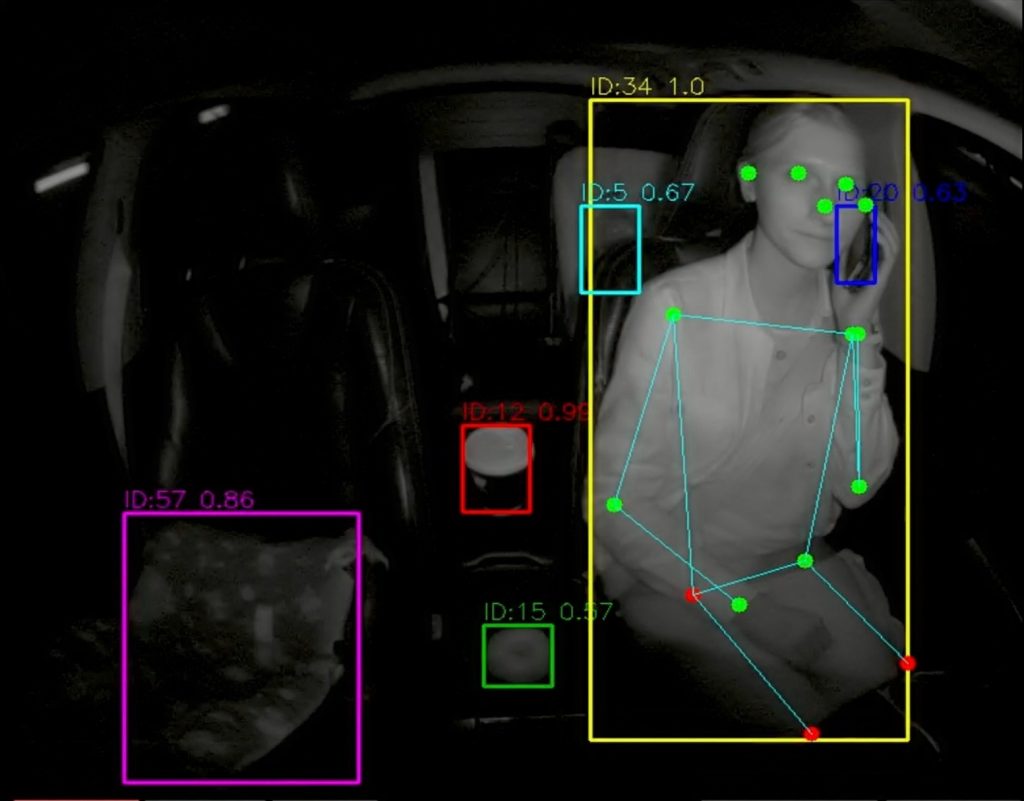

Put simply, infrared light is used to create a reflection on the cornea of the eye, and a camera captures that reflection. Each eye is tracked separately to make tracking more accurate and robust. Algorithms then locate the iris and pupil of each eye and weight the two monocular estimates into a single consensus gaze. At the same time, the software detects, tracks and interprets the person’s facial features and head movements.
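
One way to illustrate the step of weighting two monocular feeds into a consensus gaze is a confidence-weighted average of two unit gaze vectors. This is a minimal sketch under assumed inputs and is not Smart Eye's algorithm. It assumes that each eye yields a 3D gaze direction plus a confidence score in [0, 1], which might be lower when the eyelid partly covers the pupil. The function name `consensus_gaze` is hypothetical.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def normalize(v: Vec3) -> Vec3:
    """Scale a vector to unit length."""
    n = math.sqrt(v[0] ** 2 + v[1] ** 2 + v[2] ** 2)
    if n == 0:
        raise ValueError("cannot normalize a zero-length vector")
    return (v[0] / n, v[1] / n, v[2] / n)


def consensus_gaze(left_dir: Vec3, left_conf: float,
                   right_dir: Vec3, right_conf: float) -> Vec3:
    """Blend two monocular gaze directions into one unit vector.

    Each eye's direction is weighted by its (assumed) confidence score, so a
    partly occluded eye contributes less to the consensus.
    """
    total = left_conf + right_conf
    if total <= 0:
        raise ValueError("at least one eye needs a positive confidence")
    left, right = normalize(left_dir), normalize(right_dir)
    wl, wr = left_conf / total, right_conf / total
    blended = (wl * left[0] + wr * right[0],
               wl * left[1] + wr * right[1],
               wl * left[2] + wr * right[2])
    return normalize(blended)


# Usage: the right eye is partly covered, so it gets a lower confidence.
print(consensus_gaze((0.05, 0.0, 1.0), 0.9, (0.02, 0.0, 1.0), 0.4))
```

Each direction is normalized before blending, so the length of one eye's vector cannot skew the result. If the two directions point exactly opposite each other with equal weight, the blend has zero length and the function raises an error instead of returning a meaningless vector.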

Over time, the system learns each subject’s face and builds an increasingly detailed profile of that person. As a result, only a few facial features need to be visible for the system to determine head pose accurately, even when the face is partially obscured. Multi-camera setups also let the software triangulate the position and orientation of the head with greater accuracy across a wide range of real-world conditions.
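
The classic midpoint method gives a simple picture of multi-camera triangulation. Each calibrated camera contributes a ray from its optical centre toward the same facial landmark. The estimated 3D point is the midpoint of the shortest segment between the two rays. This textbook technique is shown for illustration only, because the text does not describe Smart Eye's actual method. The camera positions, the ray directions and the `triangulate_midpoint` helper are assumptions.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def dot(u: Vec3, v: Vec3) -> float:
    return u[0] * v[0] + u[1] * v[1] + u[2] * v[2]


def triangulate_midpoint(p1: Vec3, d1: Vec3, p2: Vec3, d2: Vec3,
                         eps: float = 1e-12) -> Vec3:
    """Midpoint of the closest approach between rays p1 + s*d1 and p2 + t*d2.

    p1, p2: camera centres; d1, d2: ray directions toward the same landmark.
    """
    w0 = (p1[0] - p2[0], p1[1] - p2[1], p1[2] - p2[2])
    a, b, c = dot(d1, d1), dot(d1, d2), dot(d2, d2)
    d, e = dot(d1, w0), dot(d2, w0)
    denom = a * c - b * b
    if abs(denom) < eps:
        raise ValueError("rays are (nearly) parallel; cannot triangulate")
    # Ray parameters that minimise the distance between the two rays.
    s = (b * e - c * d) / denom
    t = (a * e - b * d) / denom
    q1 = tuple(p + s * k for p, k in zip(p1, d1))
    q2 = tuple(p + t * k for p, k in zip(p2, d2))
    return tuple((x + y) / 2 for x, y in zip(q1, q2))


# Usage: two cameras 2 units apart, both aimed at a landmark at (0, 0, 1).
print(triangulate_midpoint((-1.0, 0.0, 0.0), (1.0, 0.0, 1.0),
                           (1.0, 0.0, 0.0), (-1.0, 0.0, 1.0)))  # -> (0.0, 0.0, 1.0)
```

With noisy real detections the two rays rarely intersect exactly. Taking the midpoint of their closest approach is a simple way to obtain a single best-guess point.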

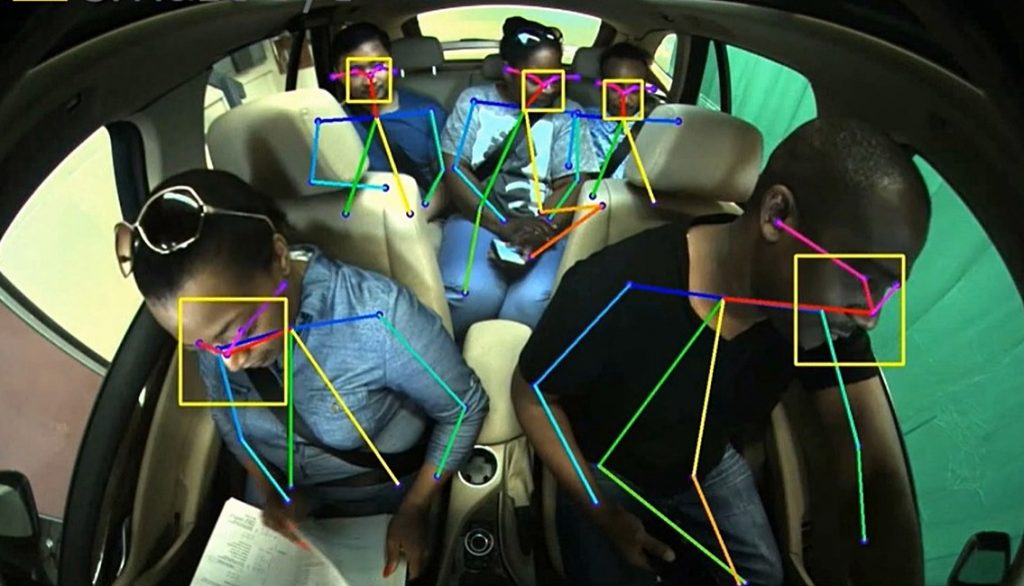

Eye, gaze and head tracking algorithms analyze the eye and head movements of the driver and passengers. From these movements they infer the occupants’ mental state and how they interact with the car interior or infotainment system, detecting potential signs of drowsiness, distraction or intoxication.
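
PERCLOS is one widely used measure for the signs of drowsiness mentioned here. It is the proportion of time within a sliding window during which the eyelids are at least 80% closed. The text does not say which drowsiness metrics Smart Eye uses, so the sketch below only illustrates that generic measure. It assumes a per-frame eyelid openness value between 0 (closed) and 1 (fully open). The window length and the `drowsy_ratio` alert threshold are hypothetical parameters.

```python
from collections import deque


class PerclosMonitor:
    """Sliding-window PERCLOS: fraction of frames with the eyes at least 80% closed."""

    def __init__(self, window_frames: int, closed_threshold: float = 0.2,
                 drowsy_ratio: float = 0.15) -> None:
        if window_frames <= 0:
            raise ValueError("window_frames must be positive")
        self.window: deque = deque(maxlen=window_frames)
        # Openness at or below 0.2 means the eyelid is at least 80% closed.
        self.closed_threshold = closed_threshold
        # Hypothetical alert threshold on the PERCLOS fraction.
        self.drowsy_ratio = drowsy_ratio
        self._closed = 0  # running count of closed frames in the window

    def update(self, openness: float) -> float:
        """Add one frame's eyelid openness (0..1) and return the current PERCLOS."""
        closed = openness <= self.closed_threshold
        if len(self.window) == self.window.maxlen and self.window[0]:
            # The oldest frame is about to be evicted; drop it from the count.
            self._closed -= 1
        self.window.append(closed)
        if closed:
            self._closed += 1
        return self._closed / len(self.window)

    def is_drowsy(self) -> bool:
        return bool(self.window) and self._closed / len(self.window) >= self.drowsy_ratio


# Usage: a 10-frame window in which the last two frames show closed eyes.
monitor = PerclosMonitor(window_frames=10)
for openness in [1.0] * 8 + [0.1, 0.05]:
    perclos = monitor.update(openness)
print(perclos, monitor.is_drowsy())  # -> 0.2 True
```

The monitor keeps a running count of closed frames, so each update costs the same however long the window is. At 30 frames per second, a one-minute window would correspond to `window_frames=1800`.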

Scanning the whole cabin

While the sensors in a Driver Monitoring System focus on the driver, Interior Sensing systems gather information about the entire car interior. The system uses several types of algorithms to analyze the different elements of the scene and build a comprehensive understanding of what is going on in the car and with the people in it.

For example, action recognition algorithms identify what the people in the car are doing, such as eating, smoking, putting on makeup or sleeping. Face recognition algorithms learn to recognize the passengers present in the car and build up profiles that help the car understand their personal preferences over time.

Gesture recognition algorithms analyze the body language of the driver and occupants. They cover both intentional gestures, such as those used to interact with the Human-Machine Interface, and unconscious body language. Emotion AI algorithms detect nuanced emotions and complex cognitive states in the people in the car by analyzing their facial expressions in context.

Understanding human behaviour

Why does a vehicle need to know what is happening to the people in it? By offering a deep, human-centric understanding of people’s behaviour in a car, Interior Sensing systems can greatly improve road safety. Through its driver monitoring functions, an Interior Sensing system can help prevent accidents by detecting dangerous driving behaviour, or even a driver who suddenly becomes ill.

By combining driver monitoring with cabin and occupant monitoring, the system gains a deeper understanding of what is happening in the entire vehicle. For example, the behaviour of other passengers, such as a baby crying or an argument in the back seat, can be a major source of distraction for the driver. By identifying what is drawing the driver’s focus away from driving, the Interior Sensing system can detect inattention at an even earlier stage and help guide appropriate interventions. This further increases the chance of preventing an accident.